II.1.5.1: The Reciprocal

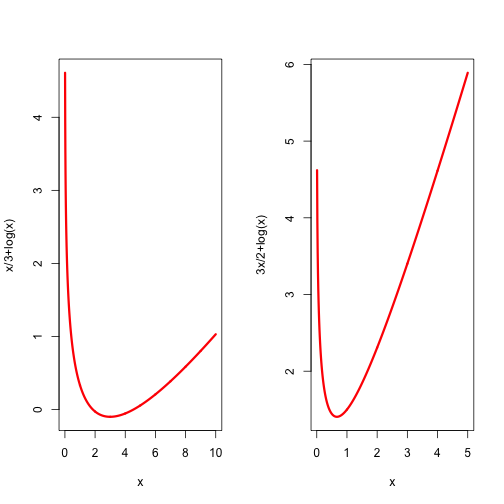

Minimizing the function , where > is a constant, over > is trivial. The first and second derivatives of are and We see from that is strictly convex on the positive reals. It has its unique minimum for , i.e. for , and the minimum value is .

Thus iterative algorithms to minimize the function, which can also be thought of as iterative algorithms to compute the reciprocal of a positive number , are of little interest in themselves. But it is of some interest to compare various algorithms, such as different majorization methods, in terms of robustness, speed of convergence, and so on.

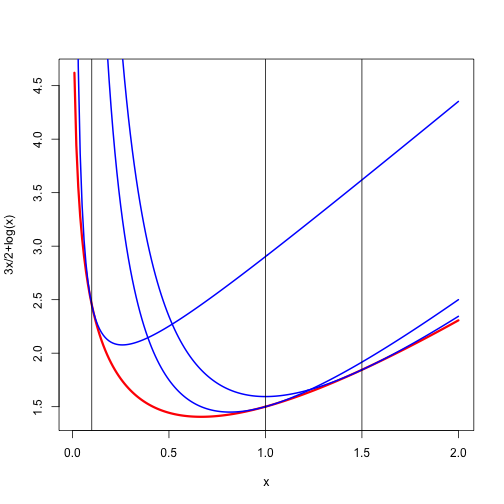

Here are plots of the function for and for .

Because the concavity of the logarithm shows that or Thus majorizes , and minimizing the majorizer gives the very simple algorithm The derivative of the update function at is . Thus our majorization iterations have linear convergence, with ratio . If the algorithm generates an increasing sequence converging to . If we have a decreasing sequence converging to . Because we have the explicit expression

Here we show the majorization for and equal to and .